The NTU HCI Lab explores future user interfaces at the intersection of human-computer interaction (HCI), artificial intelligence (AI), design, psychology, and VR/AR/mobile/wearable systems. Our work has been featured by Discovery Channel, Engadget, EE Times, New Scientist, and more. The lab is directed by Prof. Mike Y. Chen and Prof. Lung-Pan Cheng.

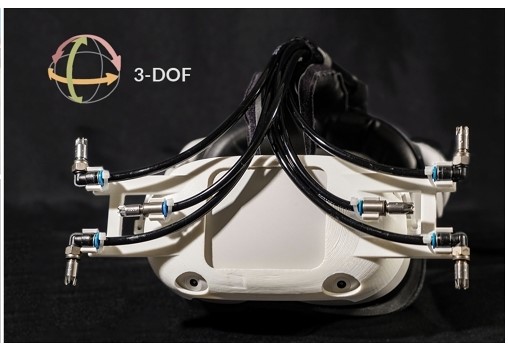

TurnAhead

3-DoF rotational haptic cues to the head to improve first-person viewing (FPV) experiences.

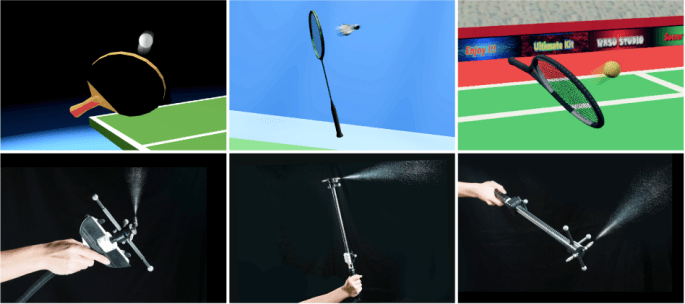

AirRacket

Perceptual force feedback design of air propulsion jets to improve the haptic experience of virtual racket sports.

MotionRing

Creating Illusory Tactile Motion around the Head using 360° Vibrotactile Headbands

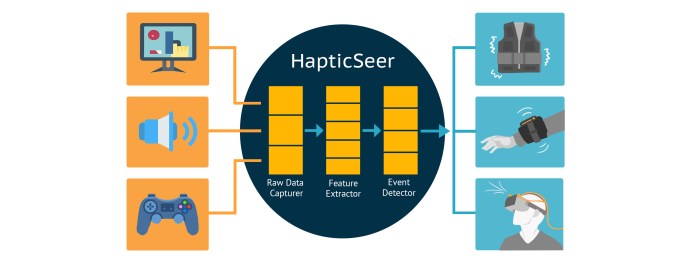

HapticSeer

A multi-channel, black-box, platform-agnostic approach to detecting game events for real-time haptic feedback.

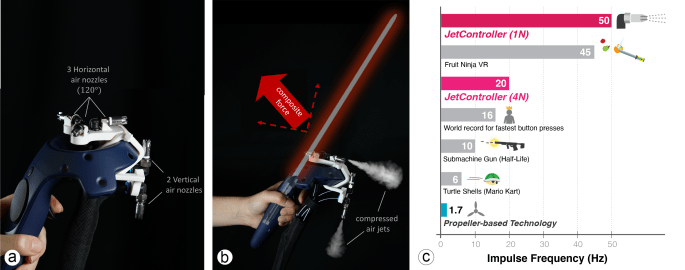

JetController

A novel high-speed 3-DoF ungrounded force feedback technology capable of supporting high-speed game events.

HeadBlaster

A wearable approach to simulating motion perception using head-mounted air propulsion jets.

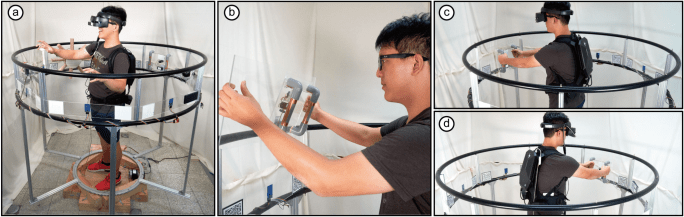

Haptic-go-round

A Surrounding Platform for Encounter-type Haptics in Virtual Reality Experiences

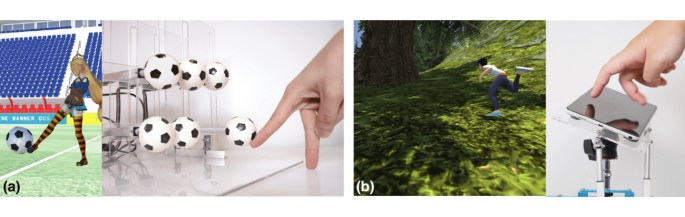

Miniature Haptics

Experiencing Haptic Feedback through Hand-based and Embodied Avatars

WalkingVibe

Reducing Virtual Reality Sickness and Improving Realism while Walking in VR using Unobtrusive Head-mounted Vibrotactile Feedback

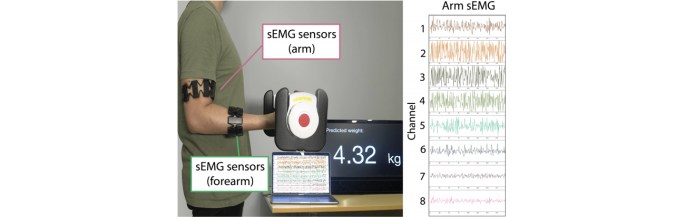

MuscleSense

Exploring Weight Sensing using Wearable Surface Electromyography (sEMG)

PhantomLegs

Reducing Virtual Reality Sickness Using Head-Worn Haptic Devices

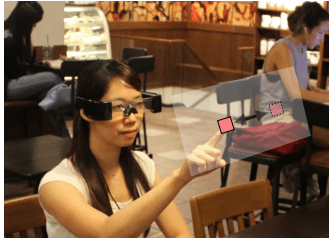

PeriText

Utilizing Peripheral Vision for Reading Text on Augmented Reality Smart Glasses

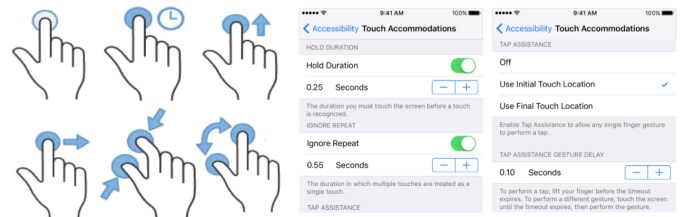

PersonalTouch

Improving Touchscreen Usability by Personalizing Accessibility Settings based on Individual User’s Touchscreen Interaction

ARPilot

Designing and Investigating AR Shooting Interfaces on Mobile Devices for Drone Videography

SpeechBubbles

Enhancing Captioning Experiences for Deaf and Hard-of-Hearing People in Group Conversations

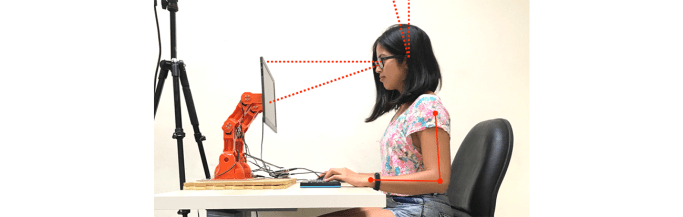

ActiveErgo

Automatic and Personalized Ergonomics using Self-actuating Furniture

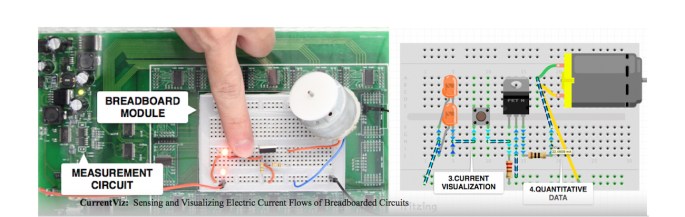

CurrentViz

Sensing and Visualizing Electric Current Flows of Breadboarded Circuits

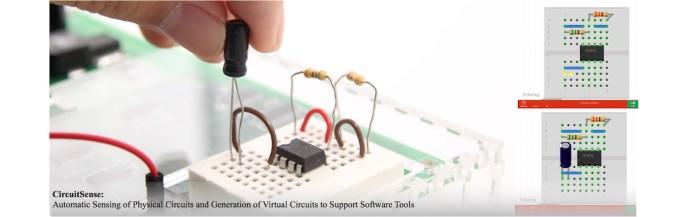

CircuitSense

Automatic Sensing of Physical Circuits and Generation of Virtual Circuits to Support Software Tools

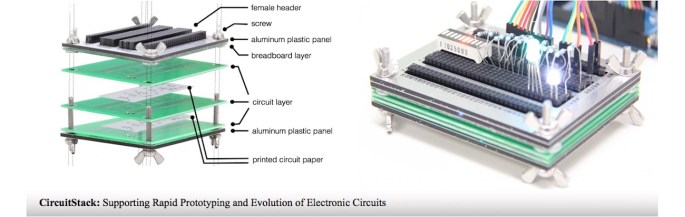

CircuitStack

Supporting Rapid Prototyping and Evolution of Electronic Circuits

Nail+

Sensing Fingernail Deformation to Detect Finger Force Touch Interactions on Rigid Surfaces

User-Defined Game Input

User-Defined Game Input for Smart Glasses in Public Space

Backhand

Sensing Hand Gestures via Back of the Hand

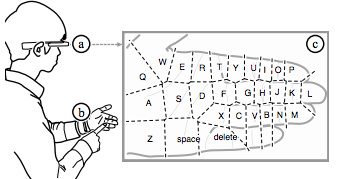

PalmType

Using Palms as Keyboards for Smart Glasses

iGrasp

Grasp-based Adaptive Keyboard for Mobile Devices

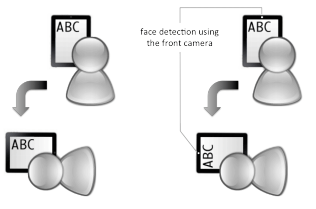

iRotate

Automatic Screen Rotation based on Face Orientation