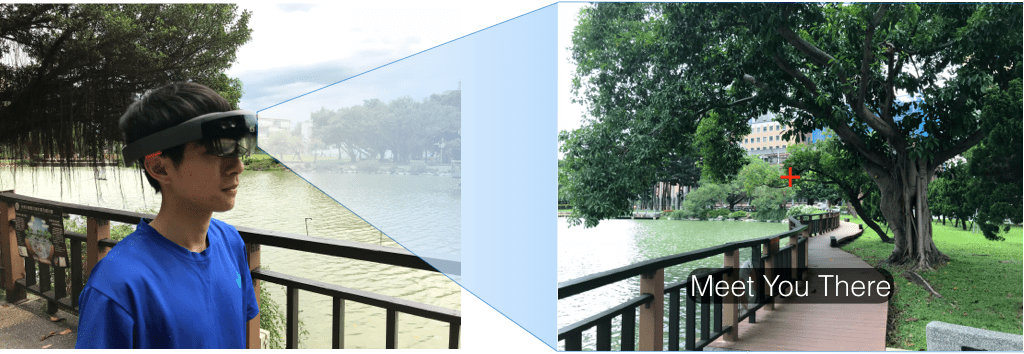

Virtual Reality (VR) sickness occurs when exposure to a virtual environment causes symptoms similar to those of motion sickness, and it has been one of the major barriers to a good VR user experience. To reduce VR sickness, prior work has explored dynamic field-of-view modification and galvanic vestibular stimulation (GVS), which recouples the visual and vestibular systems. We propose a new approach to reducing VR sickness, called PhantomLegs, which applies alternating haptic cues synchronized to users' footsteps in VR. Our prototype consists of two servos with padded swing arms, one on each side of the head, that lightly tap the head as users walk in VR. We conducted a three-session, multi-day user study with 30 participants to evaluate its effects as users navigated through a VR environment while physically seated. Results show that our approach significantly reduces VR sickness during initial exposure while remaining comfortable for users.
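To make "alternating haptic cues synchronized to users' footsteps" concrete, here is a minimal sketch of one way such cues could be scheduled. It is not the authors' implementation: the abstract does not specify stride length, tap timing, or the servo interface. The class `FootstepTapScheduler`, the assumed 0.7 m stride, and the `tap` callback (a stand-in for whatever drives the left or right servo) are all hypothetical. The scheduler counts virtual distance walked and fires one tap per completed stride, alternating sides.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class FootstepTapScheduler:
    """Hypothetical scheduler: one head tap per virtual footstep,
    alternating between the left and right servo."""

    # Assumed stride length in metres; not a value from the paper.
    step_length_m: float = 0.7
    # Stand-in for a servo driver; receives "left" or "right".
    tap: Callable[[str], None] = lambda side: None
    # Distance walked since the last emitted footstep.
    _distance_since_step: float = field(default=0.0, init=False, repr=False)
    # Side that will receive the next tap.
    _next_side: str = field(default="left", init=False, repr=False)

    def update(self, distance_moved_m: float) -> List[str]:
        """Advance by the avatar's displacement this frame.

        Returns the sides tapped during this call (possibly several if the
        avatar moved more than one stride in a single frame).
        """
        if distance_moved_m < 0:
            raise ValueError("distance_moved_m must be non-negative")
        self._distance_since_step += distance_moved_m
        tapped: List[str] = []
        # Emit one tap for every full stride accumulated so far.
        while self._distance_since_step >= self.step_length_m:
            self._distance_since_step -= self.step_length_m
            self.tap(self._next_side)
            tapped.append(self._next_side)
            # Alternate feet, mirroring left/right footsteps.
            self._next_side = "right" if self._next_side == "left" else "left"
        return tapped
```

In a render loop, `update` would be called each frame with the avatar's horizontal displacement. Driving taps from distance rather than elapsed time keeps the cue rate tied to virtual walking speed, and the taps stop when the seated user stops moving in VR.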

PhantomLegs: Reducing Virtual Reality Sickness Using Head-Worn Haptic Devices

S. Liu, N. Yu, L. Chan, Y. Peng, W. Sun and M. Y. Chen, “PhantomLegs: Reducing Virtual Reality Sickness Using Head-Worn Haptic Devices,” 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Osaka, Japan, 2019, pp. 817-826.

DOI: https://doi.org/10.1109/VR.2019.8798158